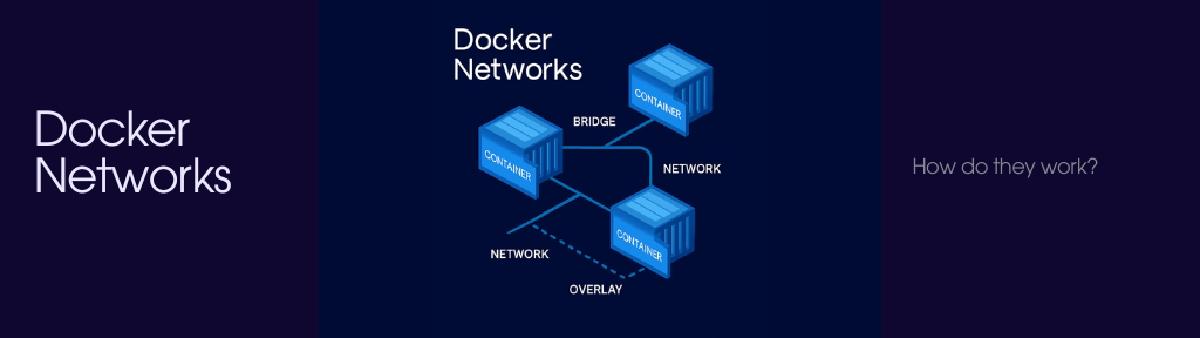

This lesson covers the behaviour of Docker networking and the types of network drivers Docker provides.

Reference: https://docs.docker.com/engine/network/drivers/

What are Docker networks

Docker networking refers to how containers and the host system communicate with each other over protocols such as TCP and UDP.

Network interface

- IP Address

- Gateway

- Routing table

- DNS Service

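- Other networking details

This lesson looks at networking from the container's point of view: a container only sees a network interface with the details listed above.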

A container attaches to the default bridge network if no network is specified, or to a user-defined network if one is specified.

Connecting a container to a network can be compared to connecting an Ethernet cable to a physical host. Just as a host can be connected to multiple Ethernet networks, a container can be connected to multiple Docker networks.

For example, a frontend container may be connected to a bridge network with external access, and a --internal network to communicate with containers running backend services that do not need external network access.

A container may also be connected to different types of network. For example, an ipvlan network to provide internet access, and a bridge network for access to local services.
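The reachability rule in the paragraphs above can be modelled in a few lines. This is a toy sketch, not how Docker enforces isolation (that happens in the kernel's firewall rules). It assumes that two containers can talk directly only when they share at least one network, and that the hypothetical "backend" network was created with --internal. All container and network names are made up for illustration.

```python
from typing import Dict, Set

# Hypothetical containers and the networks each one is attached to.
ATTACHMENTS: Dict[str, Set[str]] = {
    "frontend": {"public", "backend"},  # bridge with external access + --internal network
    "api": {"backend"},                 # backend service without external access
    "db": {"backend"},
    "other-app": {"public"},
}

# Assumption for this model: "backend" was created with --internal.
INTERNAL_NETWORKS: Set[str] = {"backend"}


def can_talk(a: str, b: str) -> bool:
    """Two containers can talk directly if they share at least one network."""
    return bool(ATTACHMENTS[a] & ATTACHMENTS[b])


def has_external_access(name: str) -> bool:
    """A container reaches the outside world through any non-internal network."""
    return any(net not in INTERNAL_NETWORKS for net in ATTACHMENTS[name])


if __name__ == "__main__":
    print(can_talk("frontend", "db"))    # True: both are on "backend"
    print(can_talk("other-app", "db"))   # False: no shared network
    print(has_external_access("api"))    # False: only on the internal network
```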

Published Ports and Mapping

Publishing a port makes a service listening on a specific container port reachable from outside by binding that container port to a port on the host.

From outside the host -> container, only published ports can be accessed.

From a container -> other containers, only containers on the same network can be accessed directly, and on that network all of their ports are reachable. Containers on other networks can only be reached through ports they publish on the host.

Use --publish (or -p) <host-port>:<container-port> to publish a container service on the host.

This creates firewall rules on the host that map a container port to a port on the Docker host, making it reachable from the outside world. Here are some examples:

| Flag value | Description |
|---|---|
| -p 8080:80 | Map port 8080 on the Docker host to TCP port 80 in the container. |
| -p 192.168.1.100:8080:80 | Map port 8080 on the Docker host IP 192.168.1.100 to TCP port 80 in the container. |
| -p 8080:80/udp | Map port 8080 on the Docker host to UDP port 80 in the container. |
| -p 8080:80/tcp -p 8080:80/udp | Map TCP port 8080 on the Docker host to TCP port 80 in the container, and map UDP port 8080 on the Docker host to UDP port 80 in the container. |
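The following Python sketch splits a -p value into its parts, which makes the flag format in the table concrete. parse_publish and PortMapping are hypothetical names, not part of Docker. The parser only covers the forms shown in the table; Docker's own parser also accepts IPv6 host addresses, port ranges and a bare container port.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PortMapping:
    host_ip: Optional[str]  # None: bind on all host interfaces
    host_port: int
    container_port: int
    protocol: str           # tcp unless a /udp or /sctp suffix is given


def parse_publish(value: str) -> PortMapping:
    """Split a -p value of the form [host_ip:]host_port:container_port[/protocol].

    Hypothetical helper: it ignores forms Docker also accepts, such as IPv6
    host addresses, port ranges and a bare container port.
    """
    spec, _, protocol = value.partition("/")
    protocol = protocol or "tcp"
    if protocol not in ("tcp", "udp", "sctp"):
        raise ValueError(f"unsupported protocol in {value!r}")
    parts = spec.split(":")
    if len(parts) == 2:
        host_ip = None
        host_port, container_port = parts
    elif len(parts) == 3:
        host_ip, host_port, container_port = parts
    else:
        raise ValueError(f"unsupported -p value {value!r}")
    return PortMapping(host_ip, int(host_port), int(container_port), protocol)


if __name__ == "__main__":
    for flag in ("8080:80", "192.168.1.100:8080:80", "8080:80/udp"):
        print(flag, "->", parse_publish(flag))
```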

Direct routing to containers in bridge networks

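Docker can also be set up so that published ports are reachable directly on the containers' IP addresses from specific host interfaces. For example, create a network where published ports on container IP addresses can be accessed directly from the interfaces vxlan.1 and eth3: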

docker network create --subnet 192.0.2.0/24 --ip-range 192.0.2.0/29 -o com.docker.network.bridge.trusted_host_interfaces="vxlan.1:eth3" mynet

Run a container in that network, publishing its port 80 to port 8080 on the host’s loopback interface:

docker run -d --network mynet --ip 192.0.2.100 -p 127.0.0.1:8080:80 nginx

The web server running on the container’s port 80 can now be accessed from the Docker host at http://127.0.0.1:8080, or directly at http://192.0.2.100:80. If remote hosts on networks connected to interfaces vxlan.1 and eth3 have a route to the 192.0.2.0/24 network inside the Docker host, they can also access the web server via http://192.0.2.100:80.
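Python's standard ipaddress module offers a quick way to check the addressing in this example. The sketch assumes that --ip-range only limits which addresses Docker hands out automatically, while a static --ip just has to fall inside --subnet. The route printed at the end uses 198.51.100.1 as a hypothetical address of the Docker host.

```python
import ipaddress

subnet = ipaddress.ip_network("192.0.2.0/24")      # --subnet
ip_range = ipaddress.ip_network("192.0.2.0/29")    # --ip-range
static_ip = ipaddress.ip_address("192.0.2.100")    # --ip

# The --ip-range is a slice of the subnet used for automatic assignment.
print(ip_range.subnet_of(subnet))   # True
# The static address belongs to the subnet ...
print(static_ip in subnet)          # True
# ... but lies outside the automatic range, so the two cannot clash.
print(static_ip in ip_range)        # False

# A remote host behind vxlan.1 or eth3 needs a route like this one
# (198.51.100.1 is a hypothetical address of the Docker host).
route = f"ip route add {subnet} via 198.51.100.1"
print(route)
```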

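Gateway

For bridge networks, the gateway mode controls how traffic between containers and networks outside the host is handled. It is set per network with -o com.docker.network.bridge.gateway_mode_ipv4=<mode> (and gateway_mode_ipv6 for IPv6).

| Gateway mode | What it means (simple) | When to use |
|---|---|---|
| nat (default) | Docker masquerades outbound container traffic behind the host's address and forwards published ports to the containers | Normal setups, laptops, most servers |
| nat-unprotected | Like nat, but Docker does not add rules blocking direct access to container ports that are not published | Trusted networks where direct routing to containers is wanted |
| routed | No NAT: container addresses are routed directly, and only published container ports are opened | When the surrounding network has routes to the container subnet |
| isolated | The bridge gets no address on the host, so there is no access to or from the host or external networks | Security, sandboxing, testing |

Host networking is not a gateway mode but a separate network driver (see Network Drivers below).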
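The default nat mode relies on source NAT (masquerading). The toy Python model below illustrates the idea: it rewrites a container's source address to the host's address and maps replies back. The real translation is done by the kernel's firewall and connection tracking, and port allocation here is deliberately simplified. All addresses and port numbers are hypothetical.

```python
from typing import Dict, Tuple

Endpoint = Tuple[str, int]  # (IP address, port)

HOST_IP = "203.0.113.10"  # hypothetical public address of the Docker host


class Masquerade:
    """Toy source-NAT table; not how Docker or the kernel implement it."""

    def __init__(self, host_ip: str, first_port: int = 40000) -> None:
        self.host_ip = host_ip
        self.next_port = first_port
        # translated (host_ip, host_port) -> original (container_ip, container_port)
        self.table: Dict[Endpoint, Endpoint] = {}

    def outbound(self, src: Endpoint) -> Endpoint:
        """Rewrite a container's source endpoint to one on the host."""
        translated = (self.host_ip, self.next_port)
        self.next_port += 1
        self.table[translated] = src
        return translated

    def inbound_reply(self, dst: Endpoint) -> Endpoint:
        """Map a reply addressed to the host back to the original container."""
        return self.table[dst]


if __name__ == "__main__":
    nat = Masquerade(HOST_IP)
    public_src = nat.outbound(("172.17.0.2", 51000))
    print(public_src)                     # what the remote server sees
    print(nat.inbound_reply(public_src))  # delivered back to the container
```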

Addressing and Subnetting

Addressing

(Only IPv4 addressing is covered here.)

Unless a specific IP address is given with the --ip flag, Docker automatically assigns the container an IP address from the network's subnet.

IPv4 addressing can be turned off for a network by passing --ipv4=false when creating it (for example, for an IPv6-only network).
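Automatic assignment can be pictured as a simple allocator. This is a hypothetical, simplified sketch: SimpleIPAM is not a Docker API, and Docker's real IPAM is more involved. It assumes the gateway takes the first host address, which matches what docker network inspect typically shows for a user-defined bridge. The remaining addresses are handed out in order, and an explicit request stands in for --ip.

```python
import ipaddress
from typing import Optional, Set


class SimpleIPAM:
    """Hypothetical allocator for one IPv4 subnet (not Docker's real IPAM)."""

    def __init__(self, subnet: str) -> None:
        self.network = ipaddress.ip_network(subnet)
        self._candidates = iter(self.network.hosts())
        self.gateway = next(self._candidates)  # first host address, e.g. x.x.x.1
        self.used: Set[ipaddress.IPv4Address] = {self.gateway}

    def allocate(self, requested: Optional[str] = None) -> ipaddress.IPv4Address:
        if requested is not None:  # like docker run --ip <address>
            addr = ipaddress.ip_address(requested)
            if addr not in self.network or addr in self.used:
                raise ValueError(f"{addr} is not available in {self.network}")
            self.used.add(addr)
            return addr
        for addr in self._candidates:  # next free address, in order
            if addr not in self.used:
                self.used.add(addr)
                return addr
        raise RuntimeError(f"no free addresses left in {self.network}")


if __name__ == "__main__":
    ipam = SimpleIPAM("172.18.0.0/16")
    print(ipam.gateway)                 # 172.18.0.1
    print(ipam.allocate())              # 172.18.0.2
    print(ipam.allocate("172.18.0.3"))  # static request
    print(ipam.allocate())              # 172.18.0.4, skipping the static one
```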

Create a network with:

docker network create <network-name>

Attach a container to a network when starting it with the --network flag, or connect a running container with docker network connect.

With docker network connect, the --alias option gives the container one or more aliases on that network.

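Subnet Allocation

Subnets are usually allocated by Docker from its default address pools, or you can specify them manually:

docker network create --ipv6 --subnet 192.0.2.0/24 --subnet 2001:db8::/64 mynet

Default pools (configurable with the default-address-pools option in the daemon's daemon.json file):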

{

"default-address-pools": [

{"base":"172.17.0.0/16","size":16},

{"base":"172.18.0.0/16","size":16},

{"base":"172.19.0.0/16","size":16},

{"base":"172.20.0.0/14","size":16},

{"base":"172.24.0.0/14","size":16},

{"base":"172.28.0.0/14","size":16},

{"base":"192.168.0.0/16","size":20}

]

}

Docker manages these pools internally to make sure the networks it creates don't overlap.
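Python's ipaddress module can be used to explore the pool list above: each base network is cut into subnets of the given size. The next_free function is a hypothetical, simplified picture of picking the first candidate that does not overlap networks already in use. Docker's real allocator is more involved.

```python
import ipaddress
from typing import Iterable, Iterator, List, Tuple

# The default-address-pools list shown above, as (base, size) pairs.
DEFAULT_POOLS: List[Tuple[str, int]] = [
    ("172.17.0.0/16", 16),
    ("172.18.0.0/16", 16),
    ("172.19.0.0/16", 16),
    ("172.20.0.0/14", 16),
    ("172.24.0.0/14", 16),
    ("172.28.0.0/14", 16),
    ("192.168.0.0/16", 20),
]


def carve(pools: Iterable[Tuple[str, int]]) -> Iterator[ipaddress.IPv4Network]:
    """Yield every subnet of the requested size from each pool, in order."""
    for base, size in pools:
        yield from ipaddress.ip_network(base).subnets(new_prefix=size)


def next_free(in_use: Iterable[str]) -> ipaddress.IPv4Network:
    """Hypothetical: first candidate subnet that overlaps none of in_use."""
    taken = [ipaddress.ip_network(n) for n in in_use]
    for candidate in carve(DEFAULT_POOLS):
        if not any(candidate.overlaps(t) for t in taken):
            return candidate
    raise RuntimeError("default address pools exhausted")


if __name__ == "__main__":
    candidates = list(carve(DEFAULT_POOLS))
    print(len(candidates))                                # 31 networks in total
    print(candidates[3], candidates[-1])                  # 172.20.0.0/16 192.168.240.0/20
    print(next_free(["172.17.0.0/16", "172.18.0.0/16"]))  # 172.19.0.0/16
```

A /14 base with size 16 yields four /16 networks, and the 192.168.0.0/16 base with size 20 yields sixteen /20 networks. In total the default pools provide 31 networks.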

DNS

A container uses the host's DNS servers unless you override them with the --dns flag.

By default, containers inherit the DNS settings as defined in the /etc/resolv.conf configuration file. Containers that attach to the default bridge network receive a copy of this file. Containers that attach to a custom network use Docker’s embedded DNS server. The embedded DNS server forwards external DNS lookups to the DNS servers configured on the host.

--hostname: the hostname a container uses for itself. Defaults to the container's ID if not specified. On a user-defined network, other containers can reach it by its container name or network alias instead of its IP address (for example, ping db).
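The lookup order described in this section can be summarised as a toy resolver. It is only a model, and the container names, aliases and addresses are hypothetical. On a user-defined network, the embedded DNS server answers for container names and aliases on that network and forwards everything else to the host's DNS servers. Containers on the default bridge only get the host's resolvers.

```python
from typing import Dict, Optional

# Hypothetical names and aliases registered on a user-defined network.
EMBEDDED_RECORDS: Dict[str, Dict[str, str]] = {
    "my-net": {
        "web": "172.18.0.2",
        "db": "172.18.0.3",
        "database": "172.18.0.3",  # a network alias for the db container
    },
}

# Stand-in for the upstream servers from the host's /etc/resolv.conf
# (198.51.100.7 is a documentation address used as a placeholder).
UPSTREAM_RECORDS: Dict[str, str] = {"example.com": "198.51.100.7"}


def resolve(name: str, network: str) -> Optional[str]:
    """Model how a container on `network` would resolve `name`."""
    if network != "bridge":  # user-defined network: embedded DNS answers first
        answer = EMBEDDED_RECORDS.get(network, {}).get(name)
        if answer is not None:
            return answer
    # Default bridge, or a name the embedded server does not know: ask upstream.
    return UPSTREAM_RECORDS.get(name)


if __name__ == "__main__":
    print(resolve("database", "my-net"))     # 172.18.0.3 via the alias
    print(resolve("db", "bridge"))           # None: no name resolution on the default bridge
    print(resolve("example.com", "my-net"))  # forwarded upstream
```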

Network Drivers

Docker Engine has a number of network drivers, as well as the default “bridge”. On Linux, the following built-in network drivers are available:

| Driver | Description |
|---|---|
| bridge | The default network driver. |
| host | Remove network isolation between the container and the Docker host. |
| none | Completely isolate a container from the host and other containers. |
| overlay | Swarm Overlay networks connect multiple Docker daemons together. |
| ipvlan | Connect containers to external VLANs. |
| macvlan | Give containers their own MAC address so they appear as physical devices on the network. |

1. Bridge

Behaviour

Each container gets its own IP from the bridge subnet

Containers on the same bridge can communicate freely

Containers on different bridges are isolated unless ports are published

Uses NAT (masquerading) for outbound internet access

Supports port publishing to expose container ports outside the host

Default bridge:

No automatic DNS by container name

All containers join it unless --network is specified

Considered legacy, not recommended for production

User-defined bridge:

Automatic DNS name resolution between containers

Better isolation between application stacks

Containers can be attached or detached at runtime

Each network has its own configurable settings

Usage Commands

Create a user-defined bridge

docker network create my-net

Run a container on a bridge network

docker run --network my-net nginx

Publish a port

docker run -p 8080:80 --network my-net nginx

Connect a running container to a network

docker network connect my-net my-container

Disconnect a container from a network

docker network disconnect my-net my-container

Remove a bridge network

docker network rm my-net

Usage Scenarios

Multi-container applications (web + database) on a single host

Isolating different projects or environments (dev, test, prod)

Secure internal communication without exposing services externally

Local development and Docker Compose based setups

Situations where container name–based service discovery is needed

Key takeaway:

Use user-defined bridge networks for almost all real-world Docker applications. The default bridge is mainly for backward compatibility and quick tests.

2. Host

Behaviour

Container shares the host’s network stack

No separate container IP is assigned

Container binds directly to host ports

Port publishing (-p, --publish) is ignored

No NAT, no userland proxy, very high performance

Network isolation is removed, but:

- Filesystem, process, user isolation still exist

Only one container can bind to a given port per host

On Docker Desktop:

Works at TCP/UDP (Layer 4) only

Linux containers only

Must be explicitly enabled
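The one-listener-per-port rule can be demonstrated without Docker. Host-networked containers share the host's network stack, so they collide on ports exactly as two ordinary processes would. The Python sketch below uses two sockets in one process as a stand-in for two such containers and assumes a Linux host.

```python
import errno
import socket


def try_bind_twice(host: str = "127.0.0.1") -> int:
    """Bind two TCP sockets to the same address and port; return the errno of the second bind (0 if it succeeded)."""
    first = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    second = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        first.bind((host, 0))          # let the OS pick a free port
        first.listen()
        port = first.getsockname()[1]
        try:
            second.bind((host, port))  # same address and port as the first listener
        except OSError as exc:
            return exc.errno
        return 0
    finally:
        first.close()
        second.close()


if __name__ == "__main__":
    print(try_bind_twice() == errno.EADDRINUSE)  # True on Linux: the port is taken
```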

Usage Commands

Run a container with host networking

docker run --network host nginx

Start a service listening on host ports

docker run --rm -it --net=host nicolaka/netshoot nc -lkv 0.0.0.0 8000

Access host services from inside container

docker run --rm -it --net=host nicolaka/netshoot nc localhost 80

Use with Docker Swarm service

docker service create --network host nginx

Usage Scenarios

High-performance networking (low latency, high throughput)

Applications that need many ports or dynamic ports

Network debugging and diagnostics

Monitoring agents and packet analyzers

Services that should behave like native host processes

When NAT and port mapping overhead must be avoided

Key takeaway:

Host networking removes network isolation for maximum performance. Use it carefully: it behaves almost like running the application directly on the host.

3. None

If you want to completely isolate the networking stack of a container, you can use the --network none flag when starting the container. Within the container, only the loopback device is created.

The following example shows the output of ip link show in an alpine container using the none network driver.

$ docker run --rm --network none alpine:latest ip link show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

No IPv6 loopback address is configured for containers using the none driver.

$ docker run --rm --network none --name no-net-alpine alpine:latest ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

4. IPvlan

Behaviour

Containers get their own IP address on the network

Uses the host’s network interface directly (no Linux bridge)

No NAT by default, traffic is routed directly

Very low overhead and high performance

Containers appear as real devices on the network

Two modes:

L2 mode: containers are on the same Layer 2 network as the host

L3 mode: containers are routed, not bridged (more isolation)

Containers cannot communicate with the host by default (security feature)

Containers can resolve each other by name through Docker's embedded DNS, as on any other user-defined network

Usage Commands

Create an IPvlan network (L2 mode, the default)

docker network create -d ipvlan --subnet=192.168.1.0/24 --gateway=192.168.1.1 -o parent=eth0 ipvlan-net

Run a container on IPvlan

docker run --network ipvlan-net nginx

Create IPvlan in L3 mode

docker network create -d ipvlan --subnet=192.168.100.0/24 -o ipvlan_mode=l3 -o parent=eth0 ipvlan-l3
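In L3 mode, the container subnet (192.168.100.0/24 in the example above) is not part of the LAN. Other machines reach it only if traffic for that subnet is routed through the Docker host. The sketch below derives that static route with Python's ipaddress module. The host's LAN address 192.168.1.10 and the LAN 192.168.1.0/24 are assumptions for illustration.

```python
import ipaddress

# Assumptions: the Docker host is 192.168.1.10 on eth0's LAN 192.168.1.0/24.
HOST_LAN_IP = ipaddress.ip_address("192.168.1.10")
LAN = ipaddress.ip_network("192.168.1.0/24")
L3_SUBNET = ipaddress.ip_network("192.168.100.0/24")  # --subnet of ipvlan-l3


def upstream_route(container_subnet, lan, host_ip) -> str:
    """Return the route that the LAN router (or other hosts) would need."""
    if container_subnet.overlaps(lan):
        raise ValueError("the L3 subnet must not overlap the parent LAN")
    if host_ip not in lan:
        raise ValueError("the next hop must be reachable on the LAN")
    return f"ip route add {container_subnet} via {host_ip}"


if __name__ == "__main__":
    print(upstream_route(L3_SUBNET, LAN, HOST_LAN_IP))
```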

Usage Scenarios

Data centers and bare-metal servers

High-performance networking workloads

Containers that must appear as real hosts on the LAN

Avoiding bridge overhead and NAT

Environments with strict network policies

When MAC address exhaustion is a concern (IPvlan uses fewer MACs than Macvlan)

Key takeaway:

IPvlan gives containers real IPs with near-native performance, while keeping better control and scalability than Macvlan. Use it when you want containers to integrate cleanly into existing networks without bridge complexity.

5. Overlay

Behaviour

Connects containers across multiple Docker hosts

Built on top of VXLAN tunneling

Containers get unique virtual IPs

Built-in service discovery and DNS

Encrypted traffic support (optional)

Works only when Docker Swarm is enabled

Network isolation across different application stacks

Uses an overlay on top of the host’s physical network
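VXLAN wraps each container Ethernet frame in a UDP datagram with an 8-byte VXLAN header (RFC 7348). That header carries a 24-bit network identifier (VNI), and Docker's overlay driver sends these datagrams on UDP port 4789 by default. The Python sketch below packs and unpacks the header and works out the per-packet overhead. That overhead is why VXLAN overlays on a 1500-byte underlay commonly use an MTU of 1450. The VNI values are arbitrary examples.

```python
import struct

VXLAN_I_FLAG = 0x08  # "I" flag: the VNI field is valid (RFC 7348)


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header: flags, 24 reserved bits, 24-bit VNI, 8 reserved bits."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_I_FLAG << 24, vni << 8)


def vni_of(header: bytes) -> int:
    """Read the VNI back out of a VXLAN header."""
    _, second_word = struct.unpack("!II", header[:8])
    return second_word >> 8


# Bytes that encapsulation adds in front of the container's IP packet, counted
# against the underlay's IP MTU (IPv4 underlay, no VLAN tag):
OUTER_IPV4 = 20
OUTER_UDP = 8
VXLAN_HEADER = len(vxlan_header(0))
INNER_ETHERNET = 14  # the container frame's own Ethernet header is carried too
OVERHEAD = OUTER_IPV4 + OUTER_UDP + VXLAN_HEADER + INNER_ETHERNET

if __name__ == "__main__":
    print(vni_of(vxlan_header(4097)))  # 4097
    print(OVERHEAD)                    # 50
    print(1500 - OVERHEAD)             # 1450: usable MTU inside the overlay
```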

Usage Commands

Initialize Swarm

docker swarm init

Create an overlay network

docker network create -d overlay my-overlay

Create encrypted overlay network

docker network create -d overlay --opt encrypted my-secure-overlay

Run a service on overlay network

docker service create --name web --network my-overlay nginx

Usage Scenarios

Multi-host Docker deployments

Microservices spread across servers

Docker Swarm clusters

Secure inter-service communication

Distributed applications without manual routing

Key takeaway:

Overlay networks make containers on different machines behave as if they are on the same private network, which is essential for multi-host container orchestration.

6. Macvlan

Behaviour

Containers get their own MAC address and IP address

Containers appear as separate physical devices on the network

No NAT or bridge, traffic goes directly to the physical network

Very high performance, near-native networking

By default, containers cannot communicate with the host

Requires network switches to allow multiple MAC addresses per port

Less scalable than IPvlan due to MAC address usage

Usage Commands

Create a Macvlan network

docker network create -d macvlan --subnet=192.168.1.0/24 --gateway=192.168.1.1 -o parent=eth0 macvlan-net

Run a container on Macvlan

docker run --network macvlan-net nginx

Create a Macvlan network with a custom range

docker network create -d macvlan --subnet=192.168.1.0/24 --ip-range=192.168.1.128/25 -o parent=eth0 macvlan-net
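With --ip-range=192.168.1.128/25, Docker assigns container addresses only from the upper half of the /24. Docker does not coordinate with a DHCP server on the LAN, so that range should be kept out of the router's DHCP pool. The Python sketch below checks this. The candidate list is a simple model of the range, not Docker's exact allocation, and the DHCP pool boundaries are hypothetical.

```python
import ipaddress

subnet = ipaddress.ip_network("192.168.1.0/24")      # --subnet
ip_range = ipaddress.ip_network("192.168.1.128/25")  # --ip-range
gateway = ipaddress.ip_address("192.168.1.1")        # --gateway from the first example

# Candidate container addresses in this model: the range minus the /24's broadcast address.
candidates = [a for a in ip_range if a != subnet.broadcast_address]


def clashes_with_dhcp(start: str, end: str) -> bool:
    """True if a (hypothetical) DHCP pool start..end overlaps the container range."""
    first, last = ipaddress.ip_address(start), ipaddress.ip_address(end)
    return any(first <= a <= last for a in candidates)


if __name__ == "__main__":
    print(len(candidates), candidates[0], candidates[-1])       # 127 192.168.1.128 192.168.1.254
    print(gateway in ip_range)                                  # False
    print(clashes_with_dhcp("192.168.1.100", "192.168.1.127"))  # False
    print(clashes_with_dhcp("192.168.1.100", "192.168.1.199"))  # True
```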

Usage Scenarios

Legacy applications needing real MAC and IP

Network appliances and monitoring tools

Containers that must be visible on the LAN

Environments where NAT and port mapping are not allowed

Labs, on-prem networks, and bare-metal servers

Key takeaway:

Macvlan makes containers behave like physical machines on the network, but requires careful network planning due to MAC address and host-communication limitations.